Concurrency and parallelism are often treated as interchangeable terms in the technology sector, but they have distinct characteristics that set them apart. This article examines the subtle yet crucial differences between the two and, through a practical illustration, shows how each approach can be applied to web scraping, giving a deeper understanding of their respective capabilities and applications.

1. What Is Concurrency?

Concurrency is the ability to manage multiple sequences of operations whose executions overlap in time: each makes progress during the same period, though not necessarily at the same instant. This is a common scenario in operating systems, where numerous processes and threads run concurrently. These threads often interact through shared memory or message passing. Resource sharing is central to concurrency; it is efficient, but it can lead to problems such as deadlock, where tasks wait on each other indefinitely, or starvation, where a task is repeatedly denied the resources it needs.
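Message passing can be sketched with Python's thread-safe `queue.Queue`: one thread sends values and another receives them, so the two threads never write to a shared variable directly. This is a minimal illustration rather than a production pattern; the function names and the `None` sentinel that signals the end of the stream are arbitrary choices.

```python
import queue
import threading

SENTINEL = None  # marker telling the consumer that no more messages will arrive


def producer(q: queue.Queue, items: list[int]) -> None:
    """Send each item to the consumer as a message, then signal completion."""
    for item in items:
        q.put(item)
    q.put(SENTINEL)


def consumer(q: queue.Queue, results: list[int]) -> None:
    """Receive messages until the sentinel arrives, storing each squared value."""
    while True:
        item = q.get()  # blocks until a message is available
        if item is SENTINEL:
            break
        results.append(item * item)


def run_pipeline(items: list[int]) -> list[int]:
    q: queue.Queue = queue.Queue()
    results: list[int] = []  # written only by the consumer thread
    threads = [
        threading.Thread(target=producer, args=(q, items)),
        threading.Thread(target=consumer, args=(q, results)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


if __name__ == "__main__":
    print(run_pipeline([1, 2, 3, 4]))  # [1, 4, 9, 16]
```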

Done well, concurrency lets a system coordinate process execution, share memory sensibly, and schedule tasks so that overall throughput rises.

2. Concurrency in Operating Systems

Concurrency in operating systems is a cornerstone of modern computing: it is the system’s ability to handle multiple operations or processes at once. It lets an operating system multitask, keeping several activities in progress at the same time, which raises throughput and improves resource utilization.

2.1 How Concurrency Works

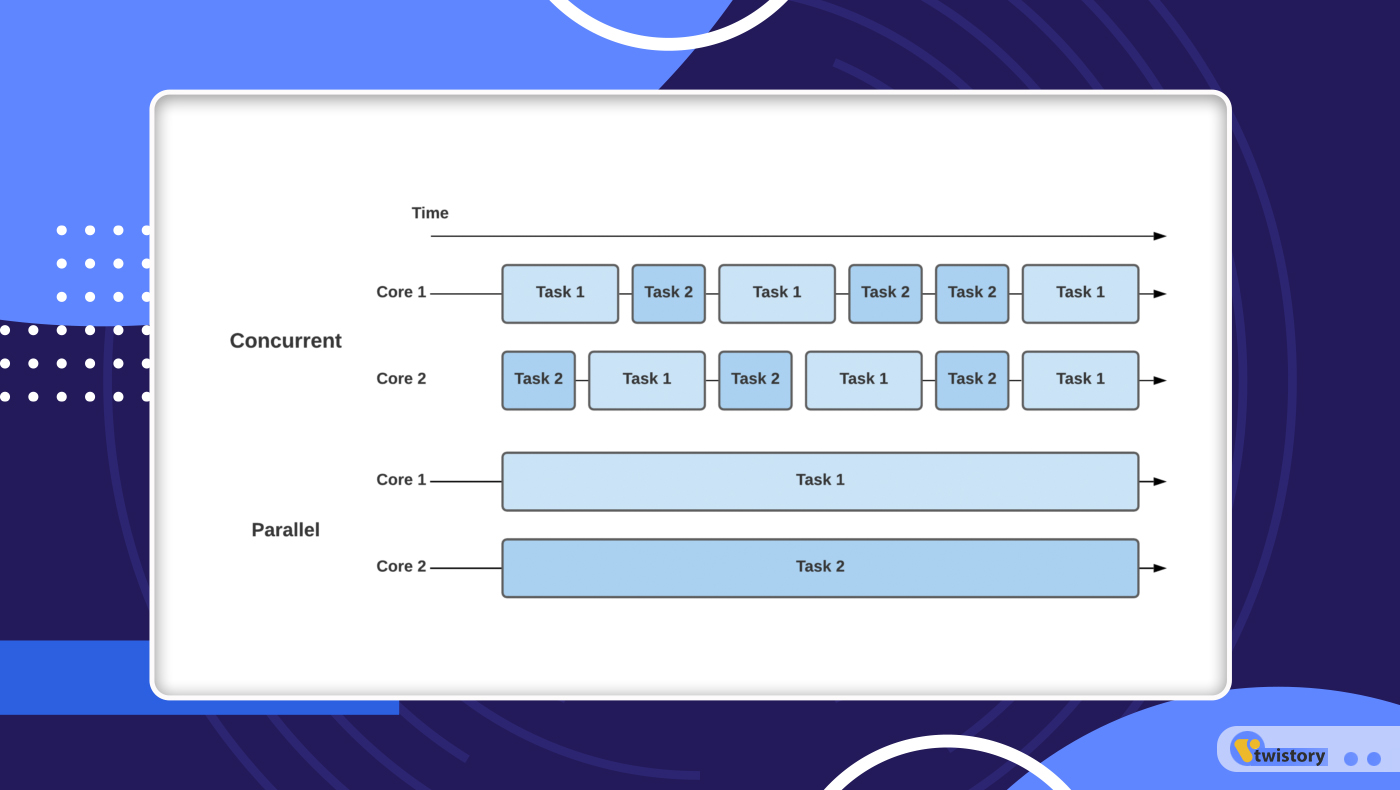

At its core, concurrency lets multiple tasks access shared resources, which requires some form of communication or coordination among them. The operating system’s scheduler runs a thread until its time slice expires or it blocks (for example, while waiting on I/O), then saves that thread’s state and switches to another one, a step known as a context switch. Repeating this rapidly gives every thread a chance to make progress.
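Interleaving can be observed on a single thread with Python's `asyncio`. One caveat: `asyncio` switches cooperatively, only at `await` points, whereas an OS scheduler preempts threads on its own schedule, so this sketch illustrates the take-turns idea rather than how the kernel works. The `worker` coroutine and its step labels are illustrative.

```python
import asyncio


async def worker(name: str, steps: int, log: list[str]) -> None:
    """Record each step, then yield control so another task can run."""
    for i in range(steps):
        log.append(f"{name}{i}")
        await asyncio.sleep(0)  # voluntary switch point: hand control back to the event loop


async def main() -> list[str]:
    log: list[str] = []
    # Both tasks share one thread; the event loop alternates between them.
    await asyncio.gather(worker("A", 3, log), worker("B", 3, log))
    return log


def interleave() -> list[str]:
    return asyncio.run(main())


if __name__ == "__main__":
    print(interleave())  # ['A0', 'B0', 'A1', 'B1', 'A2', 'B2']
```

Each `await asyncio.sleep(0)` hands control back to the event loop, so the two tasks alternate even though only one of them is running at any instant.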

2.2 Real-world Examples of Concurrency in Operating Systems

- Multithreaded Web Servers: Web servers exemplify concurrency through their ability to manage numerous client requests at once. By assigning requests to separate threads, a server can keep serving new clients while other requests wait on the network or disk, improving performance and responsiveness.

- Concurrent Databases: Databases, pivotal in many applications, rely on concurrency to let multiple users work with the same data at once. They use techniques such as locking and transaction isolation to keep simultaneous access safe and efficient.

- Parallel Computing: Parallel computing goes a step beyond concurrency, breaking large problems into smaller chunks that are processed simultaneously on multiple processors or computers. This approach is invaluable in scientific computing, data analysis, and machine learning, where it can drastically reduce the running time of complex algorithms.
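The multithreaded-server idea can be approximated with a thread pool. In this sketch, `handle_request` is a hypothetical handler whose network or disk wait is simulated with `time.sleep`; because the five waits overlap, the batch finishes in roughly the time of one request rather than five.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(request_id: int) -> str:
    """Hypothetical request handler that spends most of its time waiting on I/O."""
    time.sleep(0.2)  # simulated I/O wait; the thread gives up the CPU meanwhile
    return f"response {request_id}"


def serve(request_ids: list[int], workers: int = 5) -> tuple[list[str], float]:
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() hands requests to idle threads and returns results in request order.
        responses = list(pool.map(handle_request, request_ids))
    return responses, time.perf_counter() - start


if __name__ == "__main__":
    responses, elapsed = serve(list(range(5)))
    print(responses)
    print(f"elapsed: {elapsed:.2f} s")  # close to 0.2 s rather than 1.0 s
```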

3. What Is Parallelism?

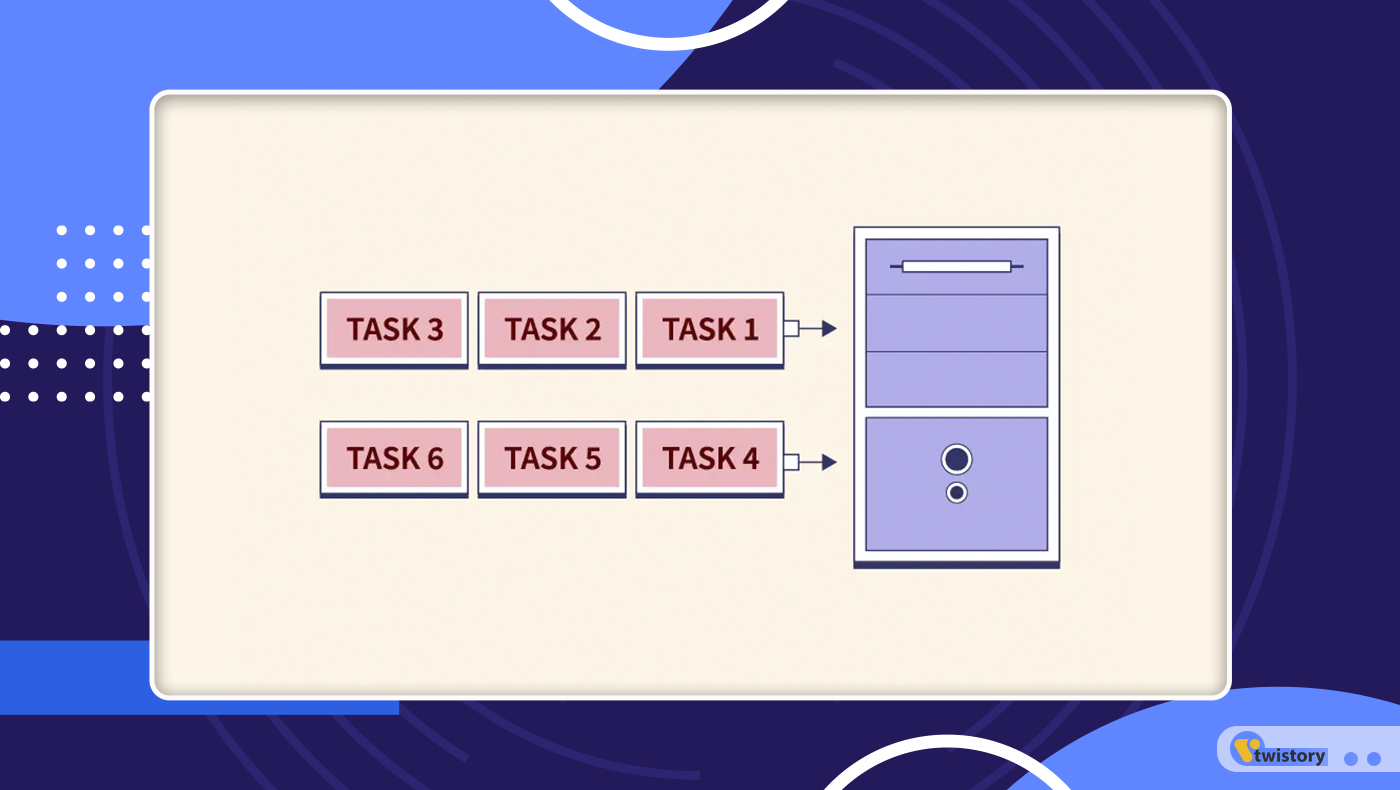

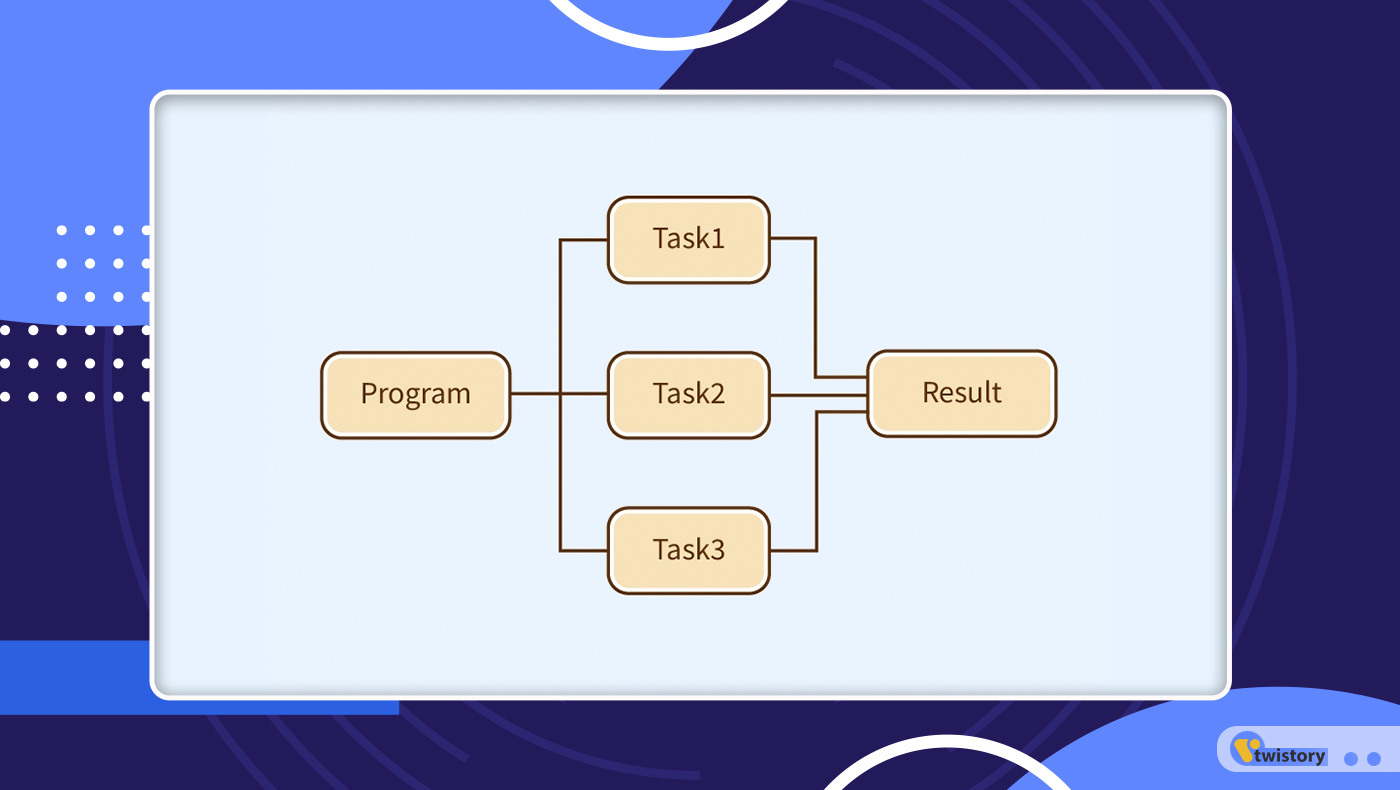

Parallelism refers to running multiple independent parts of a program at literally the same time. Concurrent tasks may merely take turns, but parallel tasks execute simultaneously, whether on separate cores of one processor, on different processors, or across multiple computers in a distributed system. As demand for faster computing grows and multicore hardware becomes cheaper, parallelism is increasingly common and cost-effective.

4. Parallelism in Operating Systems

Parallelism in operating systems is the system’s ability to carry out several operations or tasks at the same time, made possible by multi-core and multiprocessor architectures. This differs from concurrency, where tasks are interleaved but not necessarily executed simultaneously. Parallelism aims to increase computational speed and efficiency by running processes side by side.

4.1 How Parallelism Works

In parallelism, the CPU and I/O activity of one process happens at the same time as that of another. Concurrency, by contrast, gains speed by overlapping one process’s I/O with another process’s CPU work. Put simply, parallelism is like several workers tackling different parts of a project at the same time, whereas concurrency is like a single worker switching between tasks.
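A CPU-bound workload can be split into chunks and handed to several processes with `concurrent.futures.ProcessPoolExecutor`. Processes are used instead of threads because, in standard CPython builds, the global interpreter lock prevents threads from executing Python bytecode in parallel. The chunking helper and function names are illustrative assumptions; the `__main__` guard is required on platforms that start worker processes by re-importing the main module.

```python
import os
from concurrent.futures import ProcessPoolExecutor


def sum_of_squares(bounds: tuple[int, int]) -> int:
    """CPU-bound work on one chunk: the sum of i * i for start <= i < stop."""
    start, stop = bounds
    return sum(i * i for i in range(start, stop))


def split_range(n: int, parts: int) -> list[tuple[int, int]]:
    """Divide [0, n) into `parts` contiguous chunks whose sizes differ by at most one."""
    step, extra = divmod(n, parts)
    chunks, start = [], 0
    for k in range(parts):
        stop = start + step + (1 if k < extra else 0)
        chunks.append((start, stop))
        start = stop
    return chunks


def parallel_sum_of_squares(n: int, workers: int) -> int:
    chunks = split_range(n, workers)
    # Each chunk is computed in a separate process, so chunks can run on different cores at once.
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(sum_of_squares, chunks))


if __name__ == "__main__":
    n = 2_000_000
    workers = os.cpu_count() or 1
    result = parallel_sum_of_squares(n, workers)
    print(result == sum_of_squares((0, n)))  # True: parallel and sequential answers agree
```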

4.2 Real-world Examples of Parallelism in Operating Systems

- Distributed Systems: Distributed systems are a classic example of parallelism, with tasks spread across many machines or processors. The machines may sit close together on a local network or far apart, connected over a wide area network.

- Web Servers and Databases: These systems often tap into parallelism to manage numerous requests or transactions all at once. This not only makes them more responsive but also helps in better utilization of resources.

- Scientific Computing: In scientific simulations and data analytics, parallelism lets complex computational tasks run across multiple processors or machines, considerably shortening processing time.

- Multicore Processors: Multicore processors, now standard in everyday computers, are built for parallelism. With several cores executing at once, performance improves significantly for workloads that can be split into parallel tasks.

5. Comparing Concurrency and Parallelism

This section examines the key differences between concurrency and parallelism in operating systems and how each contributes to system performance.

5.1 Commonalities and Differences Between Concurrency and Parallelism

Commonalities:

- Both concurrency and parallelism deal with executing multiple tasks or processes.

- They play crucial roles in enhancing system performance and optimizing resource usage.

- You’ll find them in various applications, from web servers to scientific simulations and multimedia processing.

Differences:

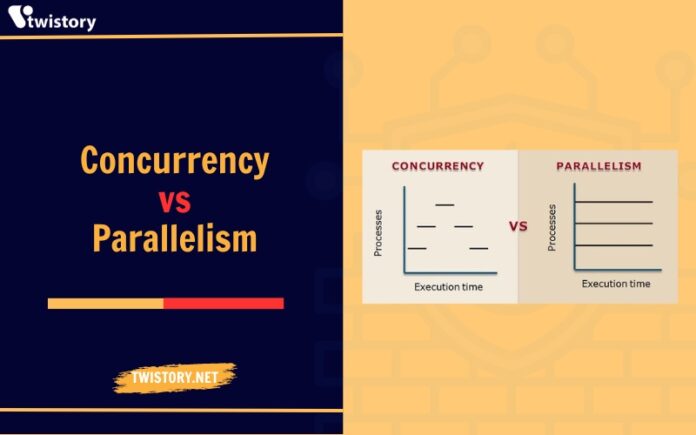

Approach to Handling Multiple Computations:

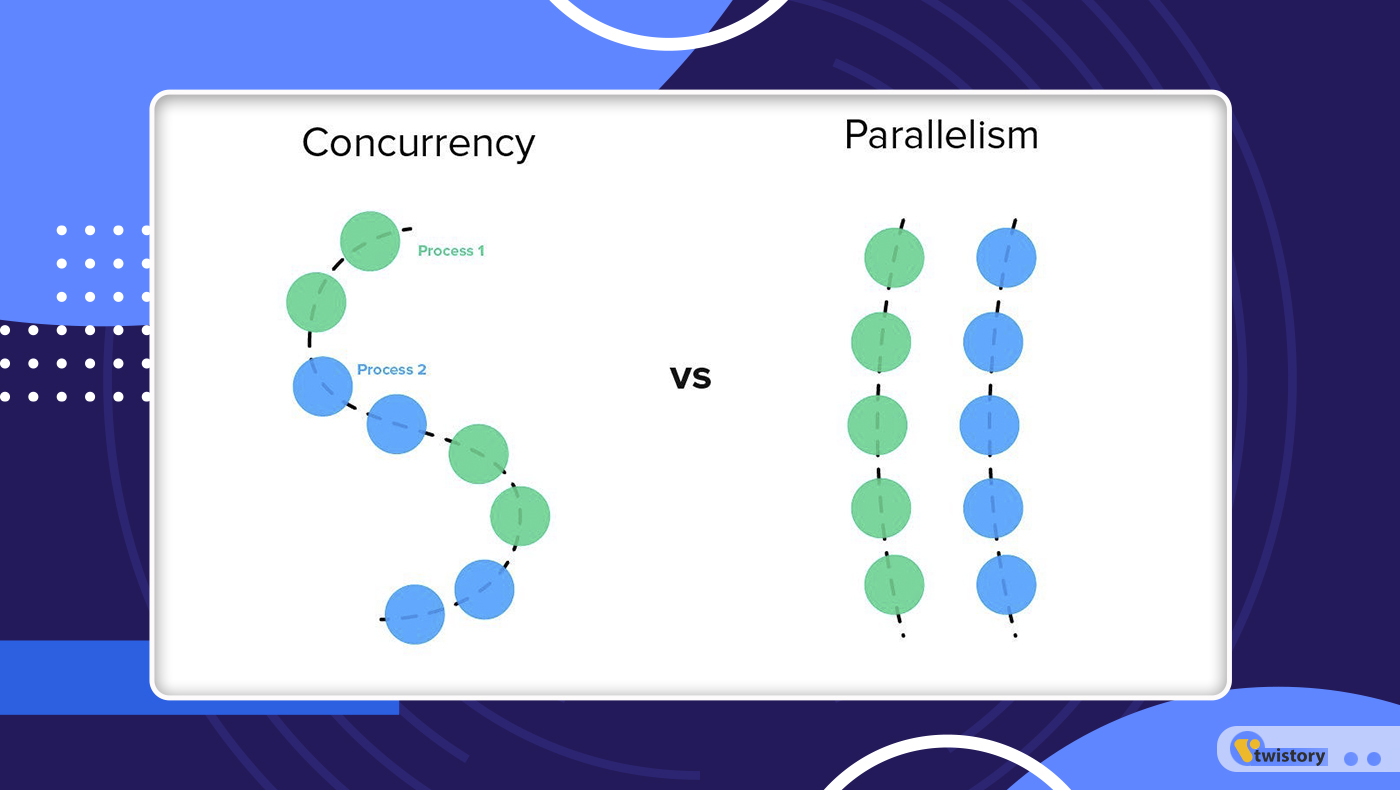

- Concurrency can manage multiple computations even on a single CPU, using context switching to give the appearance of simultaneous execution.

- Parallelism actually executes multiple computations at the same time using multiple CPUs, with each CPU dedicated to a different task.

Number of Processing Units Required:

- Concurrency can work with a single processing unit, like a single-core CPU.

- Parallelism needs multiple processing units for simultaneous task execution.

Control Flow Approach and Debugging:

- Concurrency comes with a non-deterministic control flow, leading to unpredictable execution order and more complex debugging.

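- Parallelism applied to independent tasks (for example, chunks of data that share no mutable state) can produce deterministic results, which eases debugging. Parallel code that shares mutable data, however, is exposed to the same race conditions as concurrent code.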
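The difference between unpredictable execution order and predictable results shows up in a small experiment: independent tasks finish in an order that can change from run to run, yet gathering their results by input position yields the same output every time. The random delays in `square` are an artificial stand-in for real scheduling variation, and a thread pool is used only for brevity.

```python
import random
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def square(x: int) -> int:
    # A random delay makes the completion order vary from run to run.
    time.sleep(random.uniform(0.01, 0.05))
    return x * x


def square_all(values: list[int]) -> tuple[list[int], list[int]]:
    with ThreadPoolExecutor(max_workers=max(1, len(values))) as pool:
        futures = {pool.submit(square, v): v for v in values}
        # The order in which tasks finish depends on timing, so it is not repeatable.
        finish_order = [futures[f] for f in as_completed(futures)]
        # Reading results in submission order gives the same list on every run.
        results = [f.result() for f in futures]
    return finish_order, results


if __name__ == "__main__":
    finish_order, results = square_all([1, 2, 3, 4, 5])
    print("finished:", finish_order)  # varies between runs
    print("results: ", results)       # always [1, 4, 9, 16, 25]
```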

Resource Management:

- Concurrency demands efficient resource management on a single processor, particularly in context switching, to maintain performance.

- Parallelism, with each core handling a distinct task, avoids much of the context-switching contention of a single processor and generally executes faster, although work still has to be distributed and results combined.

Fault Tolerance:

- In a concurrent program whose tasks share one process, an unhandled failure in one task (such as a crashing thread) can bring down the others, and the shared state makes the cause harder to pinpoint.

- Parallelism, especially when tasks run in separate processes or on separate machines, offers better fault isolation: a failure in one worker usually doesn’t affect the others, and redundancy is easier to implement.

Programming Model:

- Concurrency requires programming models with synchronization mechanisms like locks or semaphores for managing task execution, which can complicate the code.

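- Parallel programming models can be simpler when tasks are independent, since little synchronization is needed beyond combining the final results; parallel tasks that share data still need locks or similar mechanisms.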
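A semaphore is a typical synchronization mechanism of this kind. In a web scraper, `asyncio.Semaphore` can cap how many requests are in flight at once so that a site is not flooded. In this sketch the network call is replaced by `asyncio.sleep`, and `fetch`, `crawl`, and the `example.com` URLs are placeholders.

```python
import asyncio


async def fetch(url: str, limit: asyncio.Semaphore, stats: dict[str, int]) -> str:
    """Simulated page fetch; the semaphore caps how many run at the same time."""
    async with limit:
        stats["in_flight"] += 1
        stats["peak"] = max(stats["peak"], stats["in_flight"])
        await asyncio.sleep(0.05)  # placeholder for network I/O
        stats["in_flight"] -= 1
        return f"<html for {url}>"


async def crawl(urls: list[str], max_concurrent: int) -> tuple[list[str], int]:
    limit = asyncio.Semaphore(max_concurrent)
    stats = {"in_flight": 0, "peak": 0}
    pages = await asyncio.gather(*(fetch(u, limit, stats) for u in urls))
    return list(pages), stats["peak"]


if __name__ == "__main__":
    urls = [f"https://example.com/page/{i}" for i in range(10)]
    pages, peak = asyncio.run(crawl(urls, max_concurrent=3))
    print(len(pages), "pages fetched; peak concurrency:", peak)
```

The shared `stats` dictionary needs no lock here because all tasks run on one thread and can only switch at an `await`, so each update of `in_flight` and `peak` completes without interruption.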

Memory Utilization:

- Concurrency might lead to higher memory overhead due to tracking multiple processes or threads.

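- Parallelism can reduce context-switching overhead because each task may get a core to itself, although process-based parallelism can raise memory use, since each worker holds its own copy of its data.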

Programming Paradigms:

- Concurrency often relies on asynchronous programming, which can add complexity to code and debugging.

- Parallel programming focuses on dividing work among cooperating workers and often relies on specialized libraries or frameworks (such as OpenMP or MPI) for efficiency and scalability.

Granularity:

- Concurrency often deals with fine-grained tasks, but frequent context switching between them can hurt performance.

- Parallelism performs best with larger (coarse-grained) tasks, which amortize the cost of distributing work, but dividing and coordinating those tasks can pose greater management challenges.
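Granularity matters in practice when tasks are farmed out to processes: sending each tiny task on its own can cost more in inter-process communication than the task itself. `Executor.map` accepts a `chunksize` argument, which `ProcessPoolExecutor` uses to ship items to workers in batches. The `tiny_task` function, worker count, and sizes below are arbitrary choices; actual timings depend on the machine, so none are claimed here.

```python
import time
from concurrent.futures import ProcessPoolExecutor


def tiny_task(x: int) -> int:
    # So little work that shipping it to another process costs more than running it.
    return x + 1


def run_tiny_tasks(items: list[int], chunksize: int) -> list[int]:
    with ProcessPoolExecutor(max_workers=4) as pool:
        # chunksize controls how many items travel to a worker in each message.
        return list(pool.map(tiny_task, items, chunksize=chunksize))


if __name__ == "__main__":
    items = list(range(20_000))
    for size in (1, 1_000):
        start = time.perf_counter()
        run_tiny_tasks(items, chunksize=size)
        print(f"chunksize={size}: {time.perf_counter() - start:.2f} s")
```

With `chunksize=1`, every item costs a separate round trip between processes; larger chunks cut that overhead at the price of coarser, less evenly balanced work.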

5.2 Performance Evaluation of Concurrency vs Parallelism

Concurrency and parallelism are easily confused, so it is worth comparing their effect on performance directly. Concurrency lets multiple computations make progress during overlapping time periods, even on a single processing unit, by interleaving them on the CPU; while one task waits, another runs, so more work gets done in the same time. Parallelism, in contrast, executes multiple calculations at the same instant across several CPUs, which raises throughput and computational speed for work that can be divided. In short, concurrency makes better use of time that would otherwise be spent waiting, while parallelism adds processing capacity. The two are distinct yet interrelated, and both strongly influence the performance and efficiency of computer systems.

6. Combining Concurrency and Parallelism in Operating Systems

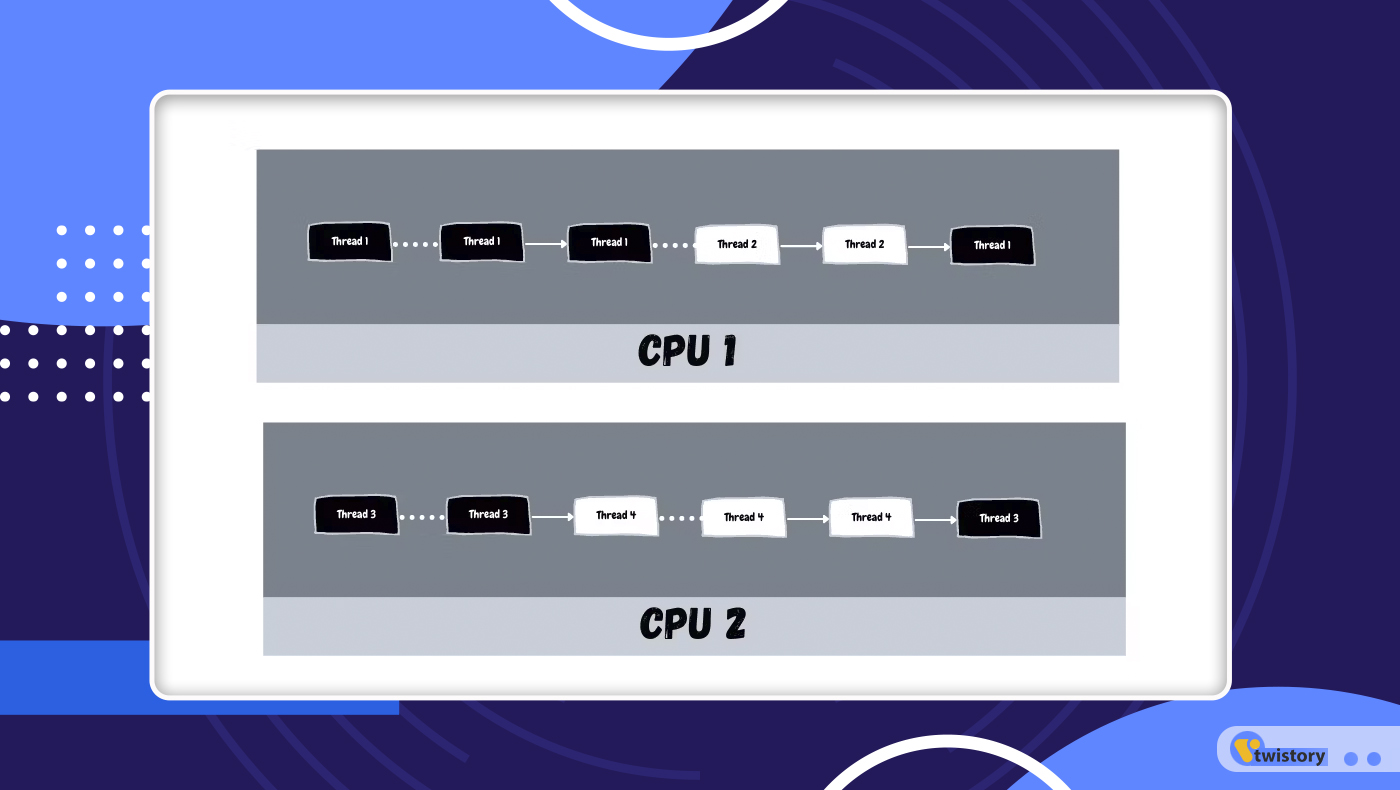

Let’s explore parallel concurrent execution, an approach that combines both ideas: work is spread across multiple processors, and each processor also interleaves several tasks.

Imagine a scenario where multiple CPUs are each running several threads. This setup is a classic example of parallel concurrent execution. Threads on different CPUs run at the same moment, which is parallelism in action. At the same time, within each CPU, the scheduler switches among that CPU’s threads concurrently, making good use of processing time and resources.
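Parallel concurrent execution can be sketched in Python by giving each worker process its own `asyncio` event loop: the processes supply parallelism, and each event loop interleaves many waiting tasks concurrently. This substitutes `asyncio` tasks for the threads described above, and the simulated `fetch` (a sleep standing in for a download), the batching scheme, and the function names are illustrative assumptions.

```python
import asyncio
from concurrent.futures import ProcessPoolExecutor


async def fetch(url: str) -> str:
    """Simulated download: the sleep stands in for waiting on the network."""
    await asyncio.sleep(0.05)
    return f"<html for {url}>"


async def fetch_batch(urls: list[str]) -> list[str]:
    # Concurrency: one event loop interleaves all fetches in this batch while they wait.
    return list(await asyncio.gather(*(fetch(u) for u in urls)))


def process_batch(urls: list[str]) -> list[str]:
    # Runs inside a worker process, which starts its own event loop.
    return asyncio.run(fetch_batch(urls))


def scrape(urls: list[str], processes: int = 2) -> list[str]:
    size = max(1, -(-len(urls) // processes))  # ceiling division, at least 1
    batches = [urls[i:i + size] for i in range(0, len(urls), size)]
    # Parallelism: separate processes handle separate batches, possibly on different cores.
    with ProcessPoolExecutor(max_workers=processes) as pool:
        results = list(pool.map(process_batch, batches))
    return [page for batch in results for page in batch]


if __name__ == "__main__":
    pages = scrape([f"https://example.com/page/{i}" for i in range(8)], processes=2)
    print(len(pages))  # 8
```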

7. Evaluating and Choosing Between Concurrency and Parallelism

Evaluating whether to use concurrency or parallelism hinges on several factors, such as the task’s nature, the system’s architecture, performance needs, and available resources. Here’s the analysis to help you make an informed choice:

Nature of the Task:

- Concurrency shines in scenarios with tasks that involve waiting, like I/O operations. Here, tasks can overlap in time without needing to run at the same moment.

- Parallelism is ideal for tasks that are heavy on computation and can be split and executed at the same time, thus cutting down the total processing time.
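One way to act on this guidance is to choose the executor from the kind of workload: a thread pool for tasks that mostly wait, and a process pool sized to the number of cores for tasks that mostly compute. The `kind` labels, the 32-thread cap for I/O work, and the sample workloads are assumptions chosen for illustration.

```python
import os
import time
from concurrent.futures import Executor, ProcessPoolExecutor, ThreadPoolExecutor
from typing import Callable, Iterable, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def make_executor(kind: str) -> Executor:
    """Pick an executor for the workload: threads for waiting, processes for computing."""
    if kind == "io":
        # Threads overlap waiting time; in standard CPython they do not speed up pure-Python math.
        return ThreadPoolExecutor(max_workers=32)
    if kind == "cpu":
        # Roughly one process per core lets computation run in parallel.
        return ProcessPoolExecutor(max_workers=os.cpu_count() or 1)
    raise ValueError(f"unknown workload kind: {kind!r}")


def run_all(func: Callable[[T], R], items: Iterable[T], kind: str) -> list[R]:
    with make_executor(kind) as pool:
        return list(pool.map(func, items))


def wait_then_echo(x: int) -> int:
    time.sleep(0.1)  # simulated I/O wait
    return x


def count_primes_below(n: int) -> int:
    """Deliberately naive CPU-bound work: trial division up to the square root."""
    return sum(1 for k in range(2, n) if all(k % d for d in range(2, int(k ** 0.5) + 1)))


if __name__ == "__main__":
    print(run_all(wait_then_echo, range(8), kind="io"))
    print(run_all(count_primes_below, [10_000, 20_000, 30_000], kind="cpu"))
```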

System Architecture and Resources:

- In a system sporting a single processor or a limited number of cores, concurrency can efficiently juggle multiple tasks.

- Systems equipped with multi-core processors are ripe for parallelism, enabling genuine simultaneous task execution.

Performance Requirements:

- If your aim is to boost responsiveness and manage multiple tasks or requests effectively, concurrency is your go-to.

- For rapid execution of large-scale, computation-heavy tasks, parallelism is the better fit, as it can drastically slash execution times.

Complexity and Development Effort:

- Concurrency might introduce complexity due to unpredictable task execution and the necessity for synchronization mechanisms.

- Parallelism, though simpler in flow control, demands thoughtful task division and managing inter-process communications.

Scalability Needs:

- Concurrency scales nicely in settings with numerous small, independent tasks, particularly in networked or distributed environments.

- Parallelism excels in scaling for high-performance computing tasks, where workloads can be distributed across multiple processors.

Debugging and Maintenance:

- Debugging concurrent applications can be tricky, with pitfalls like deadlocks and race conditions.

- Parallel applications, despite a more linear execution path, still need meticulous design to sidestep issues like data contention.
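A common way to avoid deadlock when a task needs two locks is to acquire them in a fixed global order. In this sketch, hypothetical `Account` objects are always locked in order of their `id`, so two threads transferring money in opposite directions cannot end up each holding one lock while waiting for the other.

```python
import threading


class Account:
    """Hypothetical bank account guarded by its own lock."""

    def __init__(self, account_id: int, balance: int) -> None:
        self.id = account_id
        self.balance = balance
        self.lock = threading.Lock()


def transfer(src: Account, dst: Account, amount: int) -> None:
    if src is dst:
        return  # locking the same non-reentrant Lock twice would block forever
    # Always acquire the lock of the account with the smaller id first. Threads that
    # transfer in opposite directions then request the locks in the same order, so
    # neither can hold one lock while waiting forever for the other.
    first, second = (src, dst) if src.id < dst.id else (dst, src)
    with first.lock:
        with second.lock:
            src.balance -= amount
            dst.balance += amount


def repeat_transfers(src: Account, dst: Account, times: int) -> None:
    for _ in range(times):
        transfer(src, dst, 1)


def simulate(times: int = 10_000) -> tuple[int, int]:
    a, b = Account(1, 1_000), Account(2, 1_000)
    threads = [
        threading.Thread(target=repeat_transfers, args=(a, b, times)),
        threading.Thread(target=repeat_transfers, args=(b, a, times)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return a.balance, b.balance


if __name__ == "__main__":
    print(simulate())  # (1000, 1000): every unit moved one way is moved back
```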

Resource Utilization:

- Concurrency might incur higher overhead due to context switching and memory demands.

- Parallelism, leveraging dedicated resources, can reach greater efficiency and performance but often calls for more investment in hardware.

8. Conclusion

In conclusion, the distinction between concurrency and parallelism plays a vital role in contemporary computing. Concurrency is effective for juggling multiple tasks, especially tasks that spend time waiting, even on systems with few processors, while parallelism uses multiple processors to complete computation-heavy work faster. Understanding the strengths of each is key to maximizing system efficiency.

For further exploration into tech topics, visit Twistory. Our blog is rich with detailed analyses and information across a range of technological subjects, ensuring you remain informed in the swiftly evolving digital era. Keep an eye out for more enlightening and informative articles!